So we were having dinner with some friends last night, and the topic of the blackouts in Texas came up. It quickly became clear that people's beliefs didn't just have an ideological slant; the slant was so strong you wouldn't have believed they were talking about the same event. On one side, it was because the windmills froze. On the other, the windmills didn't matter, since they were only 14% of the total; it was because Texans were gun-toting cowboys who insisted on doing things their own way and refused to listen to the real experts.

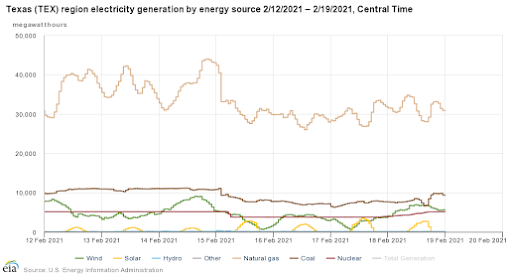

So let's go to the EIA (the federal Energy Information Administration) and see what actually happened with energy generation in Texas:

The tan line across the top is natural gas-fired generation. Brown is coal, red is nuclear, and the green line is wind. First and most obvious point: on 15 Feb every significant form of generation took a hit. Gas dropped 10 GWh, coal and nuclear each dropped a notch, and wind went from varying between 5 and 10 GWh to varying between 0 and 5 GWh.

That's just how ice storms work: they freeze windmills; they freeze the big coal stockpiles held in reserve at coal plants; they freeze valves in gas pipelines and even safety sensors at nuclear plants. On top of that, a huge, unexpected cold snap diverts natural gas to heating, and there simply wasn't enough of it to go around.

Unexpected? Indeed. Less than a month before, the forecast for Texas had been unseasonably hot:

This was from the Weather Channel, but they were using NOAA Climate Prediction Center numbers.

What actually happened? When push came to shove, the storm cut Texas generating capacity, and output maxed out well below demand. There was no excess capacity to fall back on. The real question here is: why not?

This essay by a retired electric utility planning engineer sheds some light on the subject. Most of the country has utility markets where people pay both for energy delivered and for capacity. You have to pay for capacity because it costs money to build the extra plants, the extra pipelines and storage facilities, and so forth. No money, no spare capacity to draw on in emergencies such as ice storms.

Paying for capacity is like paying for seatbelts and airbags in your car. You hope never to use them, but...

But in Texas, there is no capacity market. You pay only for energy actually delivered. The main reason is politics: the wind generation sector in Texas (most famously T. Boone Pickens, but plenty of others) has huge clout there. Texas generates more electricity from wind than any other state, even California, and indeed more than almost any country.

But, and this is the crucial fact, wind has no excess capacity. Look at the graph again: from 14 to 15 Feb, wind power dropped from 10 GWh to 0. Not only did half the windmills freeze, but the wind stopped blowing. Because wind can't promise to be there when it's needed, a capacity market in Texas would have made wind significantly more expensive, and was thus politically untenable.

So Texas went with the energy-only market, specifically to help wind. Which worked. And when times are good, it is cheaper, like a car with no airbags. But when the ice came, Texas had neither belt nor suspenders, and got caught with its pants down.